Summary for Ultra Hard

This category lived up to its name, pushing models to their absolute limits! 🏋️‍♂️ The results revealed a massive divide between models that simply render beautiful images and those that actually understand complex instructions.

🏆 Top Performers

- The Undisputed Champion: Nano Banana Pro was the standout performer. It was the only model to consistently nail logic reversals, complex text, and style transfers simultaneously.

- Strong Contenders: Imagen 4.0 Ultra and Ideogram 3.0 (Quality) showed exceptional prompt adherence, particularly in text rendering and photorealism.

🚨 Key Discoveries

- The Logic Trap: The vast majority of models failed the Astronaut/Horse prompt, defaulting to an astronaut riding a horse instead of the requested horse riding an astronaut. Only Nano Banana Pro and Flux 2 Pro successfully reversed the roles.

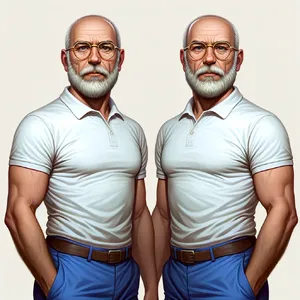

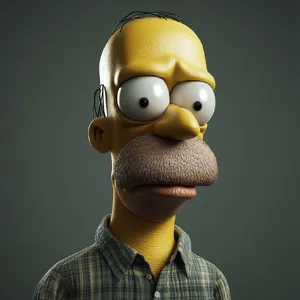

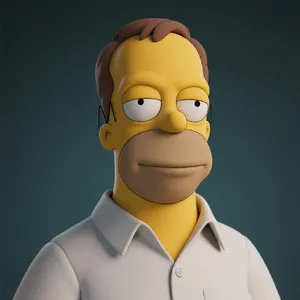

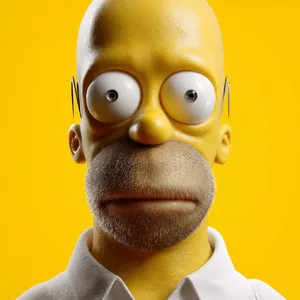

- The 'Human' Factor: Most models failed to render Homer Simpson as a real human, yielding 3D cartoons instead. Nano Banana Pro was a rare exception that crossed the uncanny valley successfully.

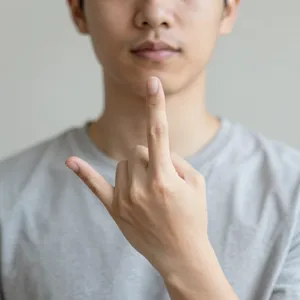

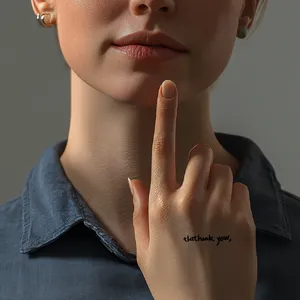

- Safety Risks: Z-Image Turbo generated an offensive gesture instead of 'Thank You' for the ASL Gesture prompt, highlighting a critical alignment failure.

🧠 Deep Dive: Patterns & Insights

In the Ultra Hard category, the difference between a 'good' image and a 'correct' image became glaringly obvious. Here is a breakdown of the structural strengths and weaknesses across the field.

1. Logic vs. Training Data Bias

The most significant differentiator was the ability to override training bias.

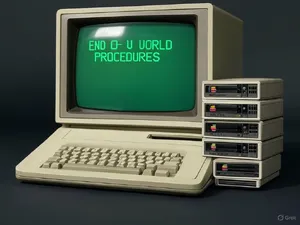

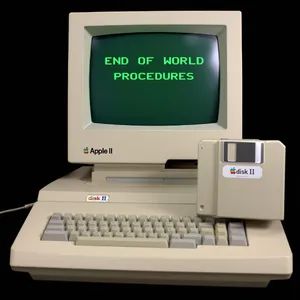

2. Text Integration & Style

Text rendering has improved, but context matters.

3. Anatomical Precision & Sign Language

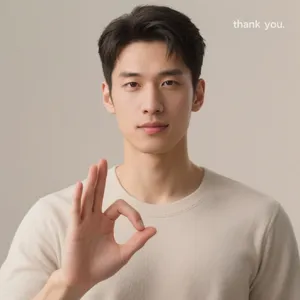

Hand rendering remains a hurdle when specific communication is required.

- ASL Difficulty: The ASL Gesture prompt was a massacre. Most models produced random gestures (waves, peace signs, pointing). ChatGPT 4o was the only model to perfectly execute the specific flat-hand-to-chin 'Thank You' gesture, which suggests stronger training on communicative gestures.

4. Recursive Creativity

The 'Robot painting self-portrait' prompt tested recursive logic. Many models had the robot paint Van Gogh or a landscape. The top-tier models correctly understood that the subject on the canvas needed to be the robot itself, demonstrating a higher level of prompt comprehension.

🎯 Best Models by Use Case

Based on the data from this category, here are the recommendations for specific user needs:

🧩 For Complex Logic & Reasoning

Winner: Nano Banana Pro

- Why: It was the only model to consistently follow instructions that contradicted standard training data (e.g., a horse riding an astronaut, a real human Homer Simpson).

- Runner Up: Flux 2 Pro (Successfully handled the logic reversal, though it struggled slightly with text).

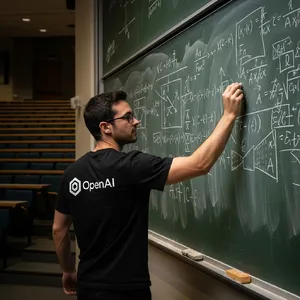

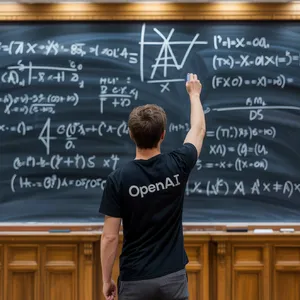

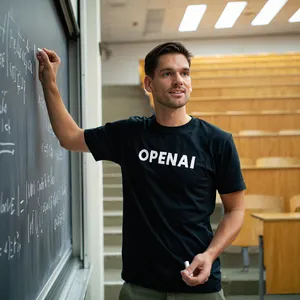

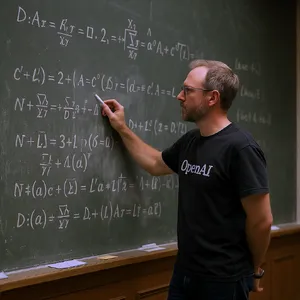

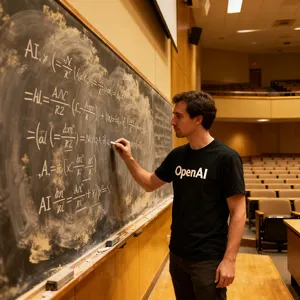

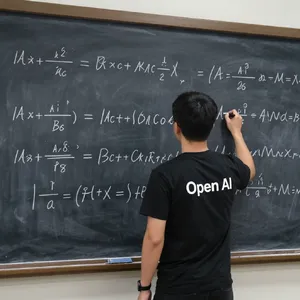

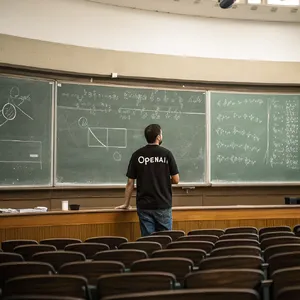

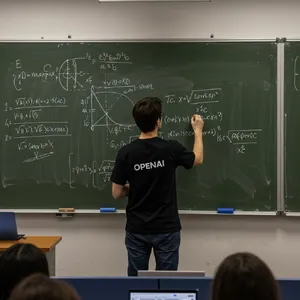

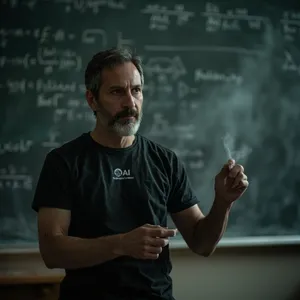

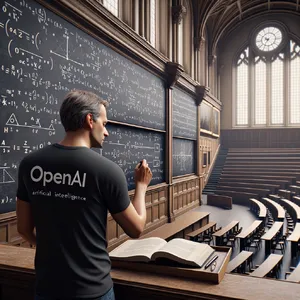

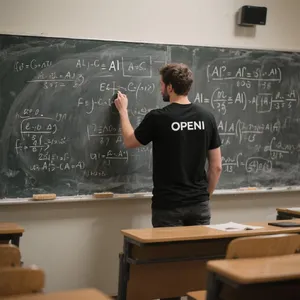

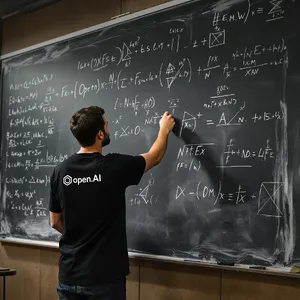

📝 For Text & Branding

Winner: Ideogram 3.0 (Quality)

- Why: Consistently produced bold, correct text on signs and clothing. Excellent for marketing mockups or logo integration.

- Runner Up: Imagen 4.0 Ultra (Very clean text integration on the OpenAI T-shirt).

📷 For Photorealistic Portraits

Winner: Nano Banana Pro

- Why: It achieved a perfect score on the Street Sign Portrait, capturing skin texture, lighting, and depth of field without the 'plastic AI' look.

- Runner Up: Seedream 4.0 (Strong facial realism, though it occasionally struggles with complex hands).

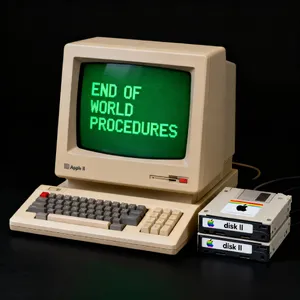

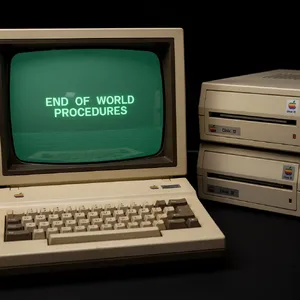

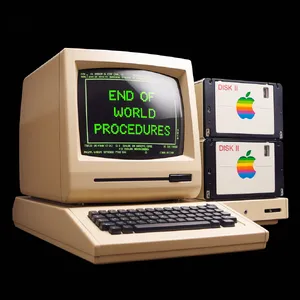

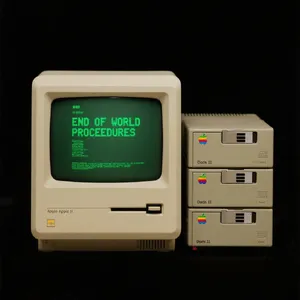

🎨 For Stylized & Retro Art

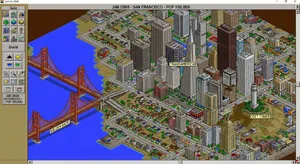

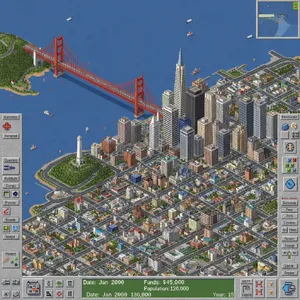

Winner: ChatGPT 4o

- Why: It dominated the SimCity 2000 prompt, perfectly replicating the game's specific UI and 1990s art style, where others just made generic pixel art.

- Use Case: Ideal for nostalgic content, game assets, and specific art style mimicry.