Summary

This analysis evaluated 11 AI image generation models across 100 prompts covering 10 diverse categories. Based on the provided overall scores, the top-performing models are:

- 🥇 ChatGPT 4o (Overall Score: 8.11)

- 🥈 Imagen 3.0 (Overall Score: 7.68)

- 🥉 Reve Image (Halfmoon) (Overall Score: 7.50)

Key Discoveries:

- High Overall Quality: Many models consistently produce high-quality, realistic, or stylistically appropriate images, particularly in categories like Architecture & Interiors (Avg: 7.96) and Photorealistic People & Portraits (Avg: 7.73).

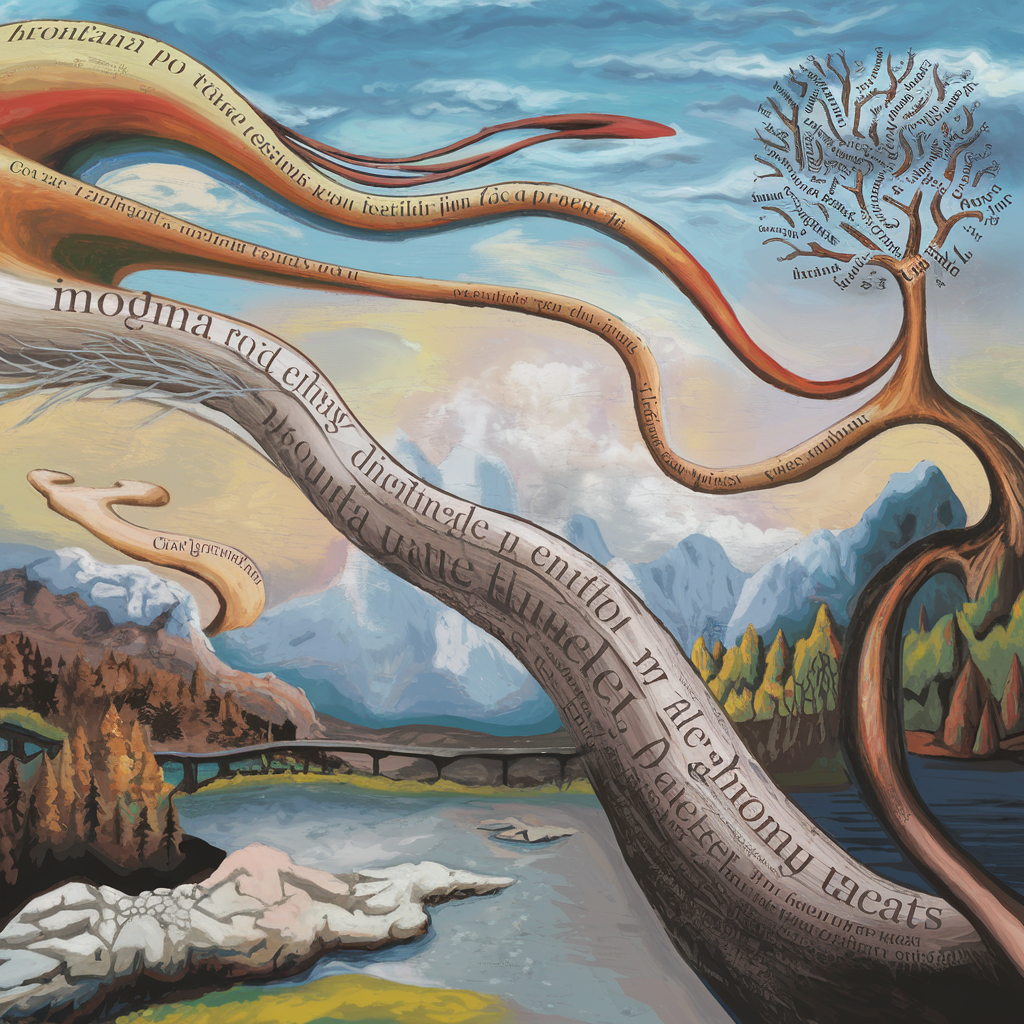

- Text Generation Remains Challenging: Generating accurate and readable text within images is a major hurdle for most models. Gibberish text, misspellings, or incorrect fonts frequently led to significant score deductions across various prompts (e.g., Poster, T-Shirt, Magazine Cover). The Text in Images category had a relatively low average score (6.92).

- Anatomy Issues Persist: Although it is improving, accurate depiction of hands and complex anatomy remains inconsistent, especially in dynamic poses or interactions (e.g., Handshake, Yoga).

- Prompt Adherence Varies: Models sometimes struggle with nuanced prompt requirements, such as specific artistic styles (e.g., Ghibli, SimCity 2000), exact actions (e.g., 'cleaning' vs. 'working' in Hawker), or unusual concepts (e.g., a horse riding an astronaut in Astronaut/Horse).

- 'Ultra Hard' Category Tests Limits: The Ultra Hard category, designed to push boundaries, saw the lowest average score (5.36), highlighting the difficulty models face with highly complex, nuanced, or absurd prompts.

- Top Performers: ChatGPT 4o and Imagen 3.0 consistently rank high across multiple categories, showing strong versatility. Reve Image (Halfmoon) also performs well and excels particularly in realism.

General Analysis & Useful Insights

Beyond the rankings, the results reveal consistent patterns in where current AI image generation models succeed and where they fail across tasks.

Overall Strengths:

- Photorealism: Many models, including Reve Image (Halfmoon), Imagen 3.0, Recraft V3, Flux 1.1 Pro Ultra, and ChatGPT 4o, demonstrate impressive capabilities in generating photorealistic images, particularly for portraits (Photorealistic People & Portraits), architecture (Architecture & Interiors), and simpler scenes.

- Style Adherence (Broad Strokes): Models are generally good at capturing broader artistic styles like 'anime', 'cartoon', 'cyberpunk', or 'photorealistic'.

- Composition & Atmosphere: Top-tier models often produce images with strong artistic composition, mood, and lighting, going beyond simple prompt fulfillment.

Common Weaknesses & Failure Modes:

- Text Generation: This remains a significant Achilles' heel. Many models produce garbled, misspelled, or nonsensical text, which heavily penalizes their scores on prompts such as Movie Poster, Magazine Cover, or Billboard. Examples include Flux 1.1 Pro Ultra, Google Imagen 3.0, and Midjourney v7, and even stronger performers occasionally falter (e.g., OpenAI DALL-E 3 in Festival).

- Hands & Anatomy: Rendering hands accurately, especially when they interact or appear in complex poses, is inconsistent. Fused fingers, incorrect finger counts, and awkward positioning are common issues (e.g., DALL-E 3's Handshake, Flux 1.1 Pro Ultra's Android Mona Lisa).

- Specific Style Replication: While general styles are handled well, replicating exact styles such as the Ghibli style or SimCity 2000 (Pixel Cityscape) proved challenging for many models, which often produced generic interpretations.

- Complex Prompt Interpretation: Models sometimes miss specific nuances or constraints in complex prompts. This includes misinterpreting actions (e.g., 'cleaning' vs. 'cooking' in Hawker), relationships between elements (e.g., a skyline forming musical notes in Musical Skyline), and highly unusual scenarios (e.g., every model missed the horse riding the astronaut in Astronaut/Horse).

- AI Artifacts: Beyond text and hands, other tells include overly smooth or artificial skin textures (DALL-E 3 in Elderly Woman), uncanny valley faces (Grok 2 Image in Cooking), and unrealistic object interactions or physics (Recraft V3's Smoking Ship).

Distinguishing Factors:

- Consistency: Top models like ChatGPT 4o and Imagen 3.0 show greater consistency across different prompt types and categories.

- Detail & Realism: Models like Reve Image (Halfmoon) and Recraft V3 often excel in producing fine details and textures, enhancing realism.

- Text Handling: Models that manage text better (even if imperfectly) gain a significant advantage in prompts requiring labels, signs, or specific wording. ChatGPT 4o leads here.

- Adherence vs. Creativity: Some models prioritize strict adherence, while others offer more creative interpretations that sometimes deviate from the prompt. The 'best' approach depends on the user's needs.

Best Model Analysis by Use Case / Category

Different models demonstrate strengths in specific areas. The recommendations below are based on the category performance data.

Recommendations:

- For photorealism, especially portraits and architecture: ChatGPT 4o, Imagen 3.0, Reve Image (Halfmoon), Recraft V3.

- For accurate text: ChatGPT 4o is the clear leader, but check results carefully.

- For anime/cartoon styles: Midjourney V6.1, Imagen 3.0, Ideogram V2.

- For graphic design elements: ChatGPT 4o and Recraft V3, but check results for style and text accuracy.

- For creative/surreal prompts: ChatGPT 4o, DALL-E 3, Imagen 3.0.

- For challenging/complex prompts: ChatGPT 4o and Imagen 3.0 tend to perform best, but expect some flaws.