Summary for Complex Scenes

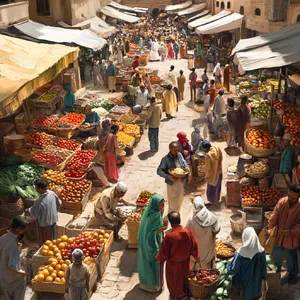

This category proved a significant challenge for many models and separated true "world simulators" from simple object generators. Complex prompts such as the Bustling market scene and the Busy city intersection often exposed weaknesses in background coherence and text rendering.

Key Findings:

- Top Performer: Nano Banana Pro emerged as the most consistent model, delivering high fidelity in textures and lighting while generally managing complex compositions well.

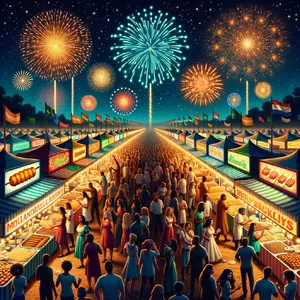

- Rising Star: Ideogram 3.0 (Quality) demonstrated exceptional performance in the latter half of the test, particularly in handling text and crowded scenes like the Nighttime festival.

- The Text Trap: In a major trend, high-fidelity models such as Flux 1.1 Pro Ultra and Recraft V3 lost significant points by generating gibberish text on street signs and banners, which broke the realism of otherwise excellent images.

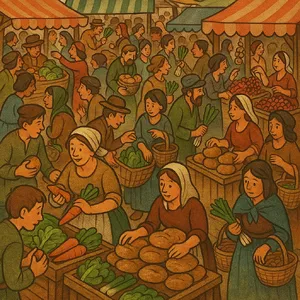

- Style vs. Substance: Models like DALL-E 3 frequently defaulted to stylized, illustrative outputs. While visually pleasing, this often resulted in lower scores when realism was the objective.

General Analysis & Insights

1. The "Crowd Coherence" Challenge

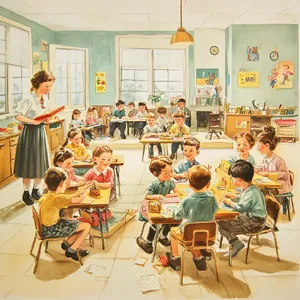

Many models struggled with the "mid-ground" in complex scenes. While foreground subjects were often detailed, background figures in prompts like the School classroom or Bustling market scene frequently dissolved into distorted blobs.

- Strength: Seedream 4.0 and Nano Banana Pro showed the best depth consistency, maintaining acceptable anatomy even for figures further back in the scene.

- Weakness: Recraft V3 and Midjourney v7 often struggled here, with significant degradation of facial features in crowd scenes.

2. Text as a Realism Breaker

In scenes involving urban environments or festivals, the presence of text (signage, banners) became a critical differentiator.

- Failure Mode: Models such as Flux 2 Pro and Recraft V3 produced beautiful lighting and composition but broke immersion with prominent gibberish text (e.g., "Vib" or "A USEBY").

- Success: Ideogram 3.0 (Quality) was a standout here, successfully rendering legible text like "DELICIOUS STREET FOOD" in the Nighttime festival, drastically improving its realism score.

3. Prompt List Fatigue

When prompts required three or more distinct activities simultaneously, models often dropped one.

- Example: In the Beach scene, many models successfully rendered volleyball and sandcastles but failed to include the requested "surfers riding waves," or rendered them as generic people in water. Z-Image Turbo and Nano Banana Pro were most reliable at capturing every item on the list.

4. Anatomy in Action

Static portraits are easy; dynamic action is hard. In the City intersection prompt, musicians playing instruments were often rendered with merged fingers or warped guitars. Imagen 4.0 Ultra and Flux 1.1 Pro Ultra struggled with hand coherence in these complex interactions.

Best Model Analysis by Use Case

📸 Best for Photorealism

Top Pick: Nano Banana Pro

- Why: It consistently delivered the most photographic lighting and textures, particularly in scenes such as the African savanna and the Market scene. It also avoids the "plastic" or "waxy" skin textures that plague other models.

🎨 Best for Composition & Complexity

Top Pick: Ideogram 3.0 (Quality)

- Why: If your complex scene involves text, signage, or distinct groups of people, this model is the strongest choice. It excelled in the Nighttime festival by integrating coherent text into a complex crowd scene.

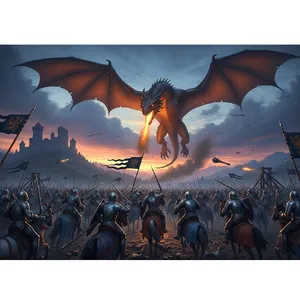

🐉 Best for Fantasy & Drama

Top Pick: DALL-E 3 / Seedream 4.0

- Why: Although DALL-E 3 scored poorly on strict realism, it (along with Seedream 4.0) produced the most dynamic and "epic" compositions for the Medieval battlefield. If the goal is visual impact rather than photographic accuracy, these are strong choices.

📊 Best All-Rounder for Prompt Adherence

Top Pick: Z-Image Turbo

- Why: This model rarely "forgot" an element. Whether it was the Astronaut and deep-sea diver or the specific activities in the School classroom, it reliably included all requested subjects, making it a safe bet for complex, multi-part prompts.