Summary for Text in Images

This analysis dives into how well different AI models handle generating text within images – a notoriously tricky task!

Key Findings:

- Top Performers: 🏆 ChatGPT 4o emerged as the most reliable model in this category, consistently producing accurate text and adhering well to prompts. MiniMax Image-01 and Recraft V3 also performed strongly with relatively few text errors.

- Common Challenge: Gibberish! Many models struggled, especially with complex layouts like posters (Movie Poster) or magazine covers (Magazine Cover), often filling secondary text areas with nonsensical characters. Models like Imagen 3.0 and Flux 1.1 Pro Ultra were particularly prone to this.

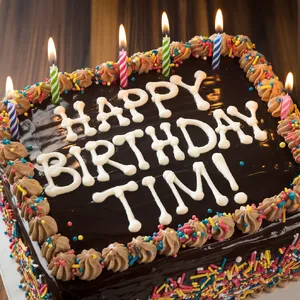

- Simple is Safer: Models generally found it easier to render simple, clear text like on the Cake Icing, Stop Sign, or Digital Clock prompts compared to stylized or multi-field text.

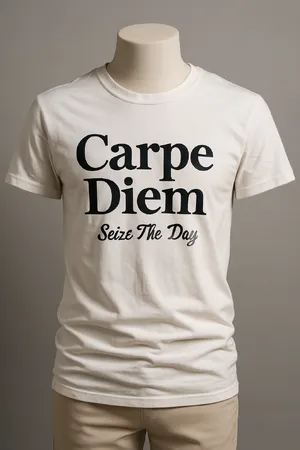

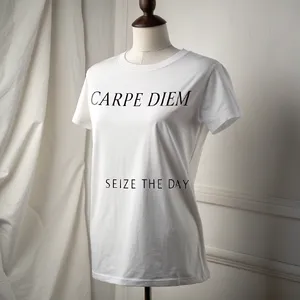

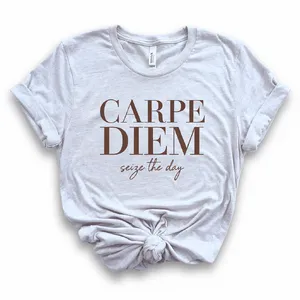

- Font Styles Matter: Adhering to specific font requests (e.g., script vs. serif on the T-shirt) was inconsistent across models.

- Notable Underperformer: 📉 Midjourney v7 showed significant weakness in this category, frequently failing prompts due to severe text errors.

Quick Conclusion: For reliable text generation, ChatGPT 4o is the current leader. Always verify generated text, especially for complex designs or specific font requirements.

General Analysis & Useful Insights for Text in Images

The "Text in Images" category highlights a critical area where AI image generators often struggle, making it a key differentiator between models.

Key Observations:

- Text Accuracy is Paramount: Unlike other categories where aesthetic appeal might compensate for minor prompt deviations, incorrect text often renders an image unusable for its intended purpose. Models scoring poorly here frequently did so primarily because of text errors (misspellings, gibberish), even if the image was otherwise high quality. See the low scores for otherwise capable models like Midjourney v7 on the Movie Poster or Magazine Cover.

- Gibberish is Common: A frequent failure mode, especially in layouts requiring multiple text fields (like posters or magazine covers), is the generation of nonsensical placeholder text or completely garbled characters. This was observed across several models, including Imagen 3.0, Flux 1.1 Pro Ultra, DALL-E 3, and notably Midjourney v7.

- Font Styles are Challenging: Adhering to specific font style requests (e.g., serif vs. script in the T-shirt prompt) proved difficult for some models like DALL-E 3 and MiniMax Image-01, while others like ChatGPT 4o and Ideogram V2 handled it better.

- Context Matters: Models generally performed better with simple, common text prompts like a Stop Sign or Digital Clock than with more complex, creative text requirements like movie titles or slogans integrated into designs.

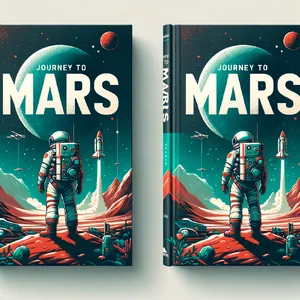

- Spelling & Minor Errors: Beyond complete gibberish, simple misspellings (e.g., 'Seze' instead of 'Seize' by Midjourney v7 on the T-shirt) or fused words ('JOURNEYTOMARS' by Grok 2 Image on the Book Cover) were also observed.

- Strong Models Emerge: Despite the challenges, some models consistently demonstrated better text generation capabilities. ChatGPT 4o and MiniMax Image-01 had the highest average scores and relatively fewer critical text errors. Recraft V3 and Reve Image (Halfmoon) also performed commendably.

- Surprising Underperformance: Midjourney v7 performed exceptionally poorly in this category, with text errors plaguing 9 out of its 10 generations, dragging down its average score significantly.

Insight: Generating accurate text is not just about spelling; it involves understanding context, layout conventions (like credits on posters), and font styles. Models that struggle here may be less suitable for graphic design, marketing materials, or any application where text is a core component.

Category Analysis: Text in Images

This category specifically tests the ability of AI models to generate accurate, legible, and stylistically appropriate text within images. This is a challenging task, often separating the top models from the rest.

Use Cases & Recommendations:

-

Simple, Clear Text (Signs, Labels, Short Messages):

-

Stylized Text & Font Specification:

-

Complex Layouts (Posters, Covers, Billboards with Multiple Text Fields):

- High Risk of Error: This is where many models falter, often producing gibberish, especially for smaller text like credits or subheadings.

- Frequent Failures: Midjourney v7, Imagen 3.0, Flux 1.1 Pro Ultra, and DALL-E 3 struggled significantly with prompts like the Movie Poster and Magazine Cover, producing nonsensical text despite often getting the main title right.

- Better Performance: ChatGPT 4o, Recraft V3, and MiniMax Image-01 generally handled these complex prompts better, though occasional errors still occurred (e.g., ChatGPT 4o included extraneous prompt text on the Movie Poster). Ideogram V2 also did well on the Movie Poster.

- Recommendation: Exercise caution. Simpler text prompts are more reliable. If complex layouts are needed, start with ChatGPT 4o, Recraft V3, MiniMax Image-01, or Ideogram V2, but anticipate potential errors and iterate.

-

Text Integrated with Realistic Scenes:

- Successful Integration: Top models like ChatGPT 4o, MiniMax Image-01, Midjourney V6.1, and Recraft V3 often did well integrating text plausibly onto surfaces like billboards (Times Square Billboard), signs (Neon Sign), or products (T-shirt - when text was correct).

- Challenges: Some models struggled with realistic text perspective or wrapping on wrinkled surfaces, though this category showed good performance overall on physical integration when the text itself was correct.

- Recommendation: Most leading models can integrate text well visually; the primary challenge remains text accuracy.

Overall: For tasks heavily reliant on text accuracy within images, ChatGPT 4o and MiniMax Image-01 currently offer the best reliability based on this dataset. However, even top models can fail, especially with complex prompts. Always double-check generated text!